Twitter’s post-Jan. 6 deplatforming reduced misinfo, study finds – “It wasn’t just a reduction from the de-platformed users themselves, but it reduced circulation on the platform as a whole”

By Will Oremus

6 June 2024

(The Washington Post) – In the week after the Jan. 6, 2021, insurrection, Twitter suspended some 70,000 accounts associated with the radicalized right-wing QAnon movement, citing their role in spreading misinformation that was fueling real-world violence.

A new study finds the move had an immediate and widespread impact on the overall spread of bogus information on the social media site, which has since been purchased by Elon Musk and renamed X.

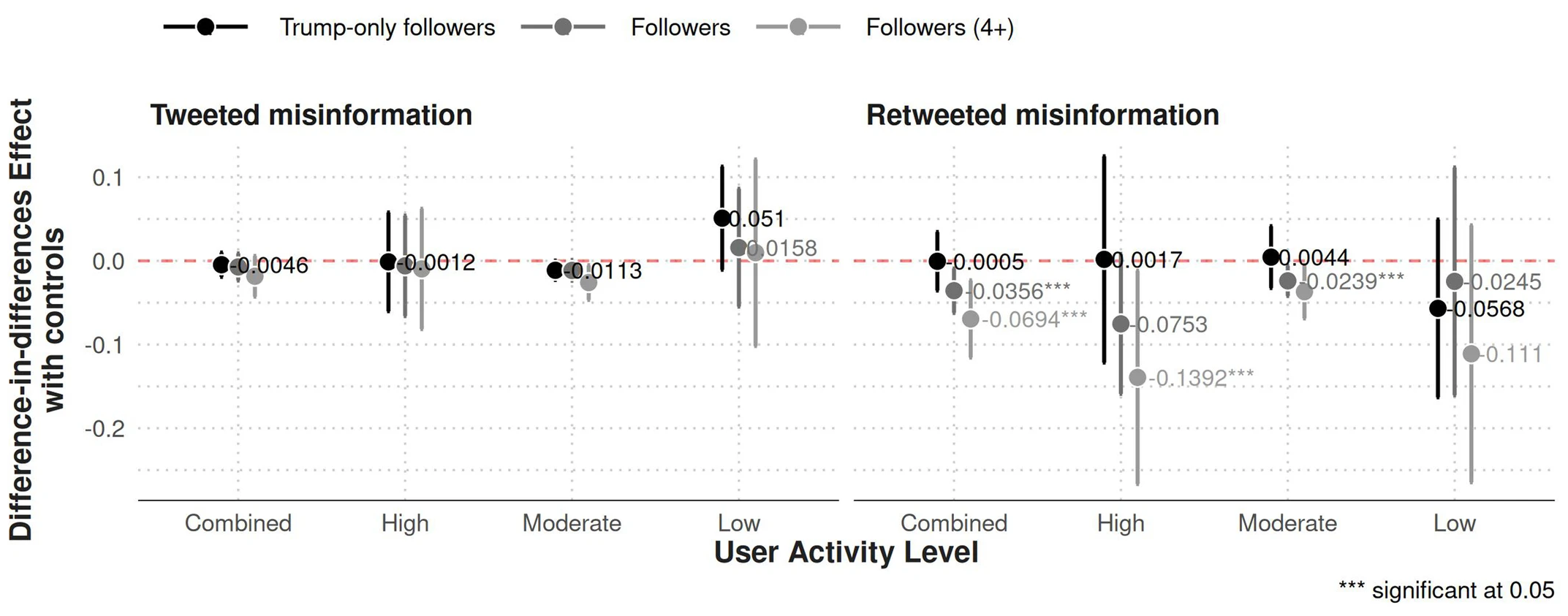

The study, published in the journal Nature on Tuesday, suggests that if social media companies want to reduce misinformation, banning habitual spreaders may be more effective than trying to suppress individual posts.

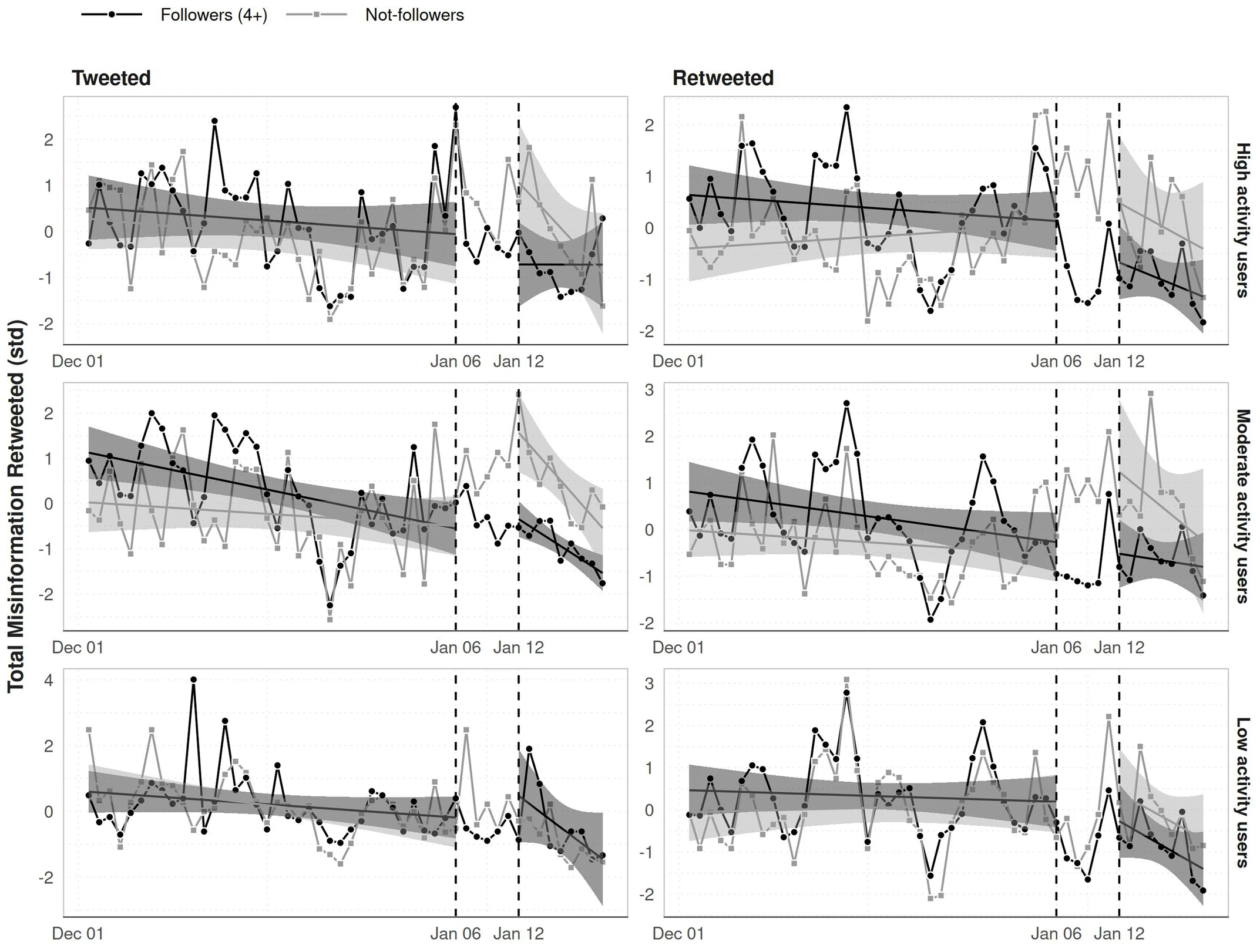

The mass suspension significantly reduced the sharing of links to “low credibility” websites among Twitter users who followed the suspended accounts. It also led a number of other misinformation purveyors to leave the site voluntarily.

Social media content moderation has fallen out of favor in some circles, especially at X, where Musk has reinstated numerous banned accounts, including former president Donald Trump’s. But with the 2024 election approaching, the study shows that it is possible to rein in the spread of online lies, if platforms have the will to do so.

“There was a spillover effect,” said Kevin M. Esterling, a professor of political science and public policy at University of California at Riverside and a co-author of the study. “It wasn’t just a reduction from the de-platformed users themselves, but it reduced circulation on the platform as a whole.”

Twitter also famously suspended Trump on Jan. 8, 2021, citing the risk that his tweets could incite further violence — a move that Facebook and YouTube soon followed. While suspending Trump may have reduced misinformation by itself, the study’s findings hold up even if you remove his account from the equation, said co-author David Lazer, professor of political science and computer and information science at Northeastern University.

The study drew on a sample of some 500,000 Twitter users who were active at the time. It focused in particular on 44,734 of those users who had tweeted at least one link to a website that was included on lists of fake news or low-credibility news sources. Of those users, the ones who followed accounts banned in the QAnon purge were less likely to share such links after the deplatforming than those who didn’t follow them.

Some of the websites the study considered low-quality were Gateway Pundit, Breitbart and Judicial Watch. The study’s other co-authors were Stefan McCabe of George Washington University, Diogo Ferrari of University of California at Riverside and Jon Green of Duke University.

Musk has touted X’s “Community Notes” fact-checking feature as an alternative to enforcing online speech rules. He has said he prefers to limit the reach of problematic posts rather than to remove them or ban accounts altogether.

A study published last year in the journal Science Advances found that attempts to remove anti-vaccine content on Facebook did not reduce overall engagement with it on the platform.

After Jan. 6, Twitter banned 70,000 right-wing accounts. Lies plummeted.

Post-January 6th deplatforming reduced the reach of misinformation on Twitter

ABSTRACT: The social media platforms of the twenty-first century have an enormous role in regulating speech in the USA and worldwide [1]. However, there has been little research on platform-wide interventions on speech [2,3]. Here we evaluate the effect of the decision by Twitter to suddenly deplatform 70,000 misinformation traffickers in response to the violence at the US Capitol on 6 January 2021 (a series of events commonly known as and referred to here as ‘January 6th’). Using a panel of more than 500,000 active Twitter users [4,5] and natural experimental designs [6,7], we evaluate the effects of this intervention on the circulation of misinformation on Twitter. We show that the intervention reduced circulation of misinformation by the deplatformed users as well as by those who followed the deplatformed users, though we cannot identify the magnitude of the causal estimates owing to the co-occurrence of the deplatforming intervention with the events surrounding January 6th. We also find that many of the misinformation traffickers who were not deplatformed left Twitter following the intervention. The results inform the historical record surrounding the insurrection, a momentous event in US history, and indicate the capacity of social media platforms to control the circulation of misinformation, and more generally to regulate public discourse.
