Limiting global warming to 2°C – Why Victor and Kennel are wrong

[For background, see 2 bold proposals emerge to change climate negotiations.] By Stefan Rahmstorf

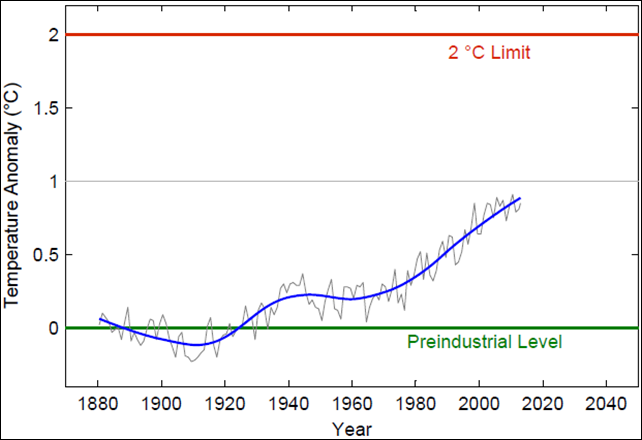

1 October 2014 (RealClimate) – In a comment in Nature titled “Ditch the 2°C warming goal”, political scientist David Victor and retired astrophysicist Charles Kennel advocate just that. But their arguments don’t hold water.

It is clear that the opinion article by Victor & Kennel is meant to be provocative. But even when making allowances for that, the arguments which they present are ill-informed and simply not supported by the facts. The case for limiting global warming to at most 2°C above preindustrial temperatures remains very strong.

Let’s start with an argument that they apparently consider especially important, given that they devote a whole section and a graph to it. They claim: “The scientific basis for the 2°C goal is tenuous. The planet’s average temperature has barely risen in the past 16 years.”

They fail to explain why short-term global temperature variability would have any bearing on the 2°C limit – and indeed this is not logical. The short-term variations in global temperature, despite causing large variations in short-term rates of warming, are very small – their standard deviation is less than 0.1°C for the annual values and much less for decadal averages (see graph – this can just as well be seen in the graph of Victor & Kennel). If this means that due to internal variability we’re not sure whether we’ve reached 2°C warming or just 1.9°C or 2.1°C – so what? This is a very minor uncertainty. (And as our readers know well, picking 1998 as start year in this argument is rather disingenuous – it is the one year that sticks out most above the long-term trend of all years since 1880, due to the strongest El Niño event ever recorded.)

The logic of 2°C

Climate policy needs a “long-term global goal” (as the Cancun Agreements call it) against which the efforts can be measured to evaluate their adequacy. This goal must be consistent with the concept of “preventing dangerous climate change” but must be quantitative. Obviously it must relate to the dangers of climate change and thus result from a risk assessment. There are many risks of climate change (see schematic below), but to be practical, there cannot be many “long-term global goals” – one needs to agree on a single indicator that covers the multitude of risks.

Global temperature is the obvious choice because it is a single metric (a) that is closely linked to radiative forcing (i.e. the main anthropogenic interference in the climate system) and (b) on which most impacts and risks depend. In practical terms this also applies to impacts that depend on local temperature (e.g. Greenland melt), because local temperatures to a good approximation scale with global temperature (that applies in the longer term, e.g. for 30-year averages, but of course not for short-term internal variability). One notable exception is ocean acidification, which is not a climate impact but a direct impact of rising CO2 levels in the atmosphere – it is to my knowledge currently not covered by the UNFCCC.

Once an overall long-term goal has been defined, it is a matter of science to determine what emissions trajectories are compatible with this, and these can and will be adjusted as time goes by and knowledge increases.

Why not use a limit on greenhouse gas concentrations, e.g. 450 ppm CO2-equivalent, as the long-term global goal? This option has its advocates and has been much discussed, but it is one step further removed from the actual impacts and risks we want to avoid along the causal chain shown above, so an extra layer of uncertainty is added. That uncertainty is the one in climate sensitivity, whose overall range spans a factor of three (1.5-4.5°C) according to the IPCC. This would mean that as scientific understanding of climate sensitivity evolves in coming decades, one might have to re-open negotiations about the “long-term global goal”. With the 2°C limit that is not the case – the strategic goal would remain the same, only the specific emissions trajectories would need to be adjusted in order to stick to this goal. That is an important advantage.
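
To make the factor-of-three point concrete, here is a minimal sketch of how a fixed concentration target maps onto a range of eventual warming. It assumes the common approximation that equilibrium warming scales with the base-2 logarithm of the concentration ratio, and a preindustrial baseline of 280 ppm. Neither the formula nor the baseline comes from the article, and the function name is purely illustrative.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed preindustrial baseline, not taken from the article


def equilibrium_warming(conc_ppm, sensitivity, baseline_ppm=PREINDUSTRIAL_PPM):
    """Eventual warming in °C for a CO2-equivalent concentration.

    `sensitivity` is the warming per doubling of concentration (°C).
    Assumes warming is proportional to log2 of the concentration ratio.
    """
    return sensitivity * math.log2(conc_ppm / baseline_ppm)


TARGET_PPM = 450.0  # the concentration goal discussed in the article

# IPCC range for climate sensitivity quoted in the article: 1.5 to 4.5 °C
low = equilibrium_warming(TARGET_PPM, 1.5)
high = equilibrium_warming(TARGET_PPM, 4.5)

print(f"{TARGET_PPM:.0f} ppm CO2-eq: {low:.1f} to {high:.1f} °C of eventual warming")
```

Under these assumptions the same 450 ppm target corresponds to roughly 1°C at the low end of the sensitivity range and roughly 3°C at the high end. A concentration goal would therefore need revisiting whenever the sensitivity estimate shifts, while a temperature goal would not.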

2°C is feasible

Victor & Kennel claim that the 2°C limit is “effectively unachievable”. In support they only offer a self-citation to a David Victor article, but in fact they disagree with the vast majority of scientific literature on this point. The IPCC has only this year summarized this literature, finding that the best estimate of the annual cost of limiting warming to 2°C is 0.06% of global GDP (1). This implies just a minor delay in economic growth. If you normally would have a consumption growth of 2% per year (say), the cost of the transformation would reduce this to 1.94% per year. This can hardly be called prohibitively expensive.

When Victor & Kennel claim holding the 2°C line is unachievable, they are merely expressing a personal, pessimistic political opinion. This political pessimism may well be justified, but it should be expressed as such and not be confused with a geophysical, technological or economic infeasibility of limiting warming to below 2°C.

Because Victor & Kennel complain about policy makers “chasing an unattainable goal”, they apparently assume that their alternative proposal of focusing on specific climate impacts would lead to a weaker, more easily attainable limit on global warming. But they provide no evidence for this, and most likely the opposite is true.

One needs to keep in mind that 2°C was already devised based on the risks of certain impacts, as epitomized in the famous “reasons of concern” and “burning embers” diagrams of the last IPCC reports (see the IPCC WG2 SPM page 13), which lay out major risks as a function of global temperature. Several major risks are considered “high” already for 2°C warming, and if anything many of these assessed risks have increased from the 3rd to the 4th to the 5th IPCC reports, i.e. they may arise already at lower temperature values than previously thought.
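
The “minor delay in economic growth” in the cost estimate above can be checked with a few lines of arithmetic. The sketch below compounds the article's illustrative 2% and 1.94% growth rates and asks how much later the slower path reaches the same consumption level. The 50-year horizon and the function names are assumptions chosen for illustration, not figures from the article or the IPCC.

```python
import math

BASELINE_GROWTH = 0.02    # 2% per year: the article's illustrative growth rate
MITIGATION_COST = 0.0006  # 0.06 percentage points per year, as quoted in the article
HORIZON_YEARS = 50        # assumed horizon, for illustration only


def consumption_index(rate, years, start=100.0):
    """Consumption level after compounding `rate` for `years`, starting at `start`."""
    return start * (1.0 + rate) ** years


def delay_in_years(baseline, reduced, years):
    """Extra years the slower path needs to reach the baseline path's level at `years`."""
    return years * math.log(1.0 + baseline) / math.log(1.0 + reduced) - years


reduced_growth = BASELINE_GROWTH - MITIGATION_COST
without_policy = consumption_index(BASELINE_GROWTH, HORIZON_YEARS)
with_policy = consumption_index(reduced_growth, HORIZON_YEARS)
delay = delay_in_years(BASELINE_GROWTH, reduced_growth, HORIZON_YEARS)

print(f"Growth with mitigation: {reduced_growth:.2%} per year")
print(f"Consumption index after {HORIZON_YEARS} years: "
      f"{without_policy:.0f} without policy, {with_policy:.0f} with policy")
print(f"Delay in reaching the same level: {delay:.1f} years")
```

With these numbers, consumption after 50 years ends up about 3% below the no-policy path, which amounts to a delay of roughly a year and a half over half a century.
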
One of the rationales behind 2°C was the AR4 assessment that above 1.9°C global warming we start running the risk of triggering the irreversible loss of the Greenland Ice Sheet, eventually leading to a global sea-level rise of 7 meters. In the AR5, this risk is reassessed to start already at 1 °C global warming. And sea-level projections of the AR5 are much higher than those of the AR4. [more]

Limiting global warming to 2 °C – why Victor and Kennel are wrong

A physics-based equation, with only two drivers (both natural) as independent variables, explains measured average global temperatures since before 1900 with 95% correlation, calculates credible values back to 1610, and predicts through 2037. The current trend is down.

Search “AGWunveiled” for the drivers, method, equation, data sources, history (hind cast to 1610), predictions (to 2037) and an explanation of why CO2 is NOT a driver.